How to build a secure and resilient website in 2024

As digitalization keeps increasing in our society, the number of security threats grows with it. A secure site is important not only to keep hackers from accessing your data, but also to keep your visitors safe. In this blog post we'll go through some important practices that help keep your site secure.

You've probably heard about systems and sites getting hacked, and data breaches where lots of passwords are stolen. The trend is clear: digital attacks keep increasing and you need to step up your game to reduce the risk of being affected. There are many different attack vectors to consider. To name a few:

- Trying to log in with existing accounts to steal data or to change content.

- Injecting JavaScript into a site, where it can automatically perform actions as a site visitor or system user.

- Trying to take down the site with a Distributed Denial of Service (DDOS) attack.

- Taking over the network to fool visitors with changes to the site, or even replacing the entire site with a fake copy.

Use a CDN and a WAF to guard against DDOS attacks

A CDN (Content Delivery Network) and a WAF (Web Application Firewall) can help handle large numbers of requests and prevent malicious attacks such as DDOS attacks. A DDOS (Distributed Denial of Service) attack is when an attacker uses a bot network to create lots of traffic to a website, with the intention of overloading the servers so that they stop responding to real users trying to access the site. A CDN is a globally distributed, high-capacity network that sits between the visitors and the web servers. It offloads a lot of traffic from the web servers, since it can deliver cached versions of the resources needed for a page, usually static resources such as images, JavaScript and CSS.
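For a CDN to serve cached copies, the origin server normally tells it which responses are safe to cache. Here is a minimal Python sketch of that idea. The helper name `cache_control_for`, the list of file extensions and the one-day cache lifetime are our own illustrative assumptions and not taken from any particular CDN.

```python
from pathlib import PurePosixPath

# Illustrative assumption: file types we treat as static, cacheable assets.
STATIC_EXTENSIONS = {".png", ".jpg", ".svg", ".css", ".js", ".woff2"}


def cache_control_for(url_path: str) -> str:
    """Return a Cache-Control header value for a URL path (no query string)."""
    suffix = PurePosixPath(url_path).suffix.lower()
    if suffix in STATIC_EXTENSIONS:
        # Static assets: allow the CDN and browsers to cache them for one day.
        return "public, max-age=86400"
    # Everything else (for example personalized pages) is not cached.
    return "no-store"
```

With rules like these, requests for images, scripts and stylesheets can be answered by the CDN, while the web servers only handle the pages that really need them.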

A WAF helps protect web applications by filtering and monitoring HTTP traffic between a web application and the internet. It typically protects web applications from attacks such as cross-site request forgery, cross-site scripting (XSS), file inclusion, and SQL injection, among others.

Keeping your dependencies up to date

A modern website is built on top of lots of dependencies, usually delivered as so-called “packages”. Even a platform like Optimizely releases its software in packages, where some packages get updated every month while others stay pretty stable. If a security issue is found, it’s usually fixed and released in a new package version. However, unless you upgrade your packages as new versions are released, a publicly disclosed vulnerability may remain in your solution, ready to be exploited by hackers.
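The check itself is simple to reason about: compare what you have installed with the version where the fix was released. Below is a minimal Python sketch, assuming plain dotted version numbers and two hypothetical helper functions. In practice you would rely on your package manager's own audit tooling rather than writing this yourself.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted version such as '12.4.1' into (12, 4, 1)."""
    return tuple(int(part) for part in version.split("."))


def outdated_packages(
    installed: dict[str, str], patched: dict[str, str]
) -> list[str]:
    """List packages whose installed version is older than the patched one."""
    return sorted(
        name
        for name, fixed_in in patched.items()
        if name in installed
        and parse_version(installed[name]) < parse_version(fixed_in)
    )


# Hypothetical package names and versions, for illustration only.
installed = {"Example.Web": "2.3.0", "Example.Data": "1.10.0"}
patched = {"Example.Web": "2.3.1", "Example.Data": "1.9.5"}
needs_upgrade = outdated_packages(installed, patched)
```

Here only `Example.Web` ends up in `needs_upgrade`, since `Example.Data` is already newer than the version containing the fix.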

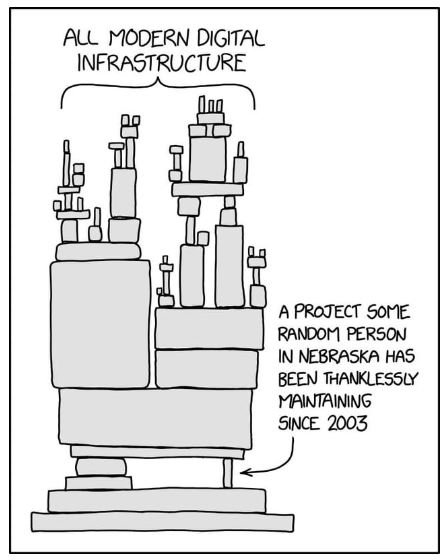

An illustration of a modern digital infrastructure with potential vulnerabilities. Image from https://xkcd.com/2347/

Use an updated secure layer of transport

You’ve probably heard the term SSL, or perhaps HTTPS, which has been more or less standard since 2018, when Google Chrome started labeling sites without it as “not secure”. HTTPS is HTTP sent over a connection encrypted with TLS (https://www.cloudflare.com/learning/ssl/transport-layer-security-tls/), which prevents so-called “man-in-the-middle” attacks where someone taps the network and reads or alters the content sent over the internet.

TLS comes in different versions, ranging from 1.0 to the latest 1.3 which has further enhancements. Versions 1.0 and 1.1 are no longer supported in Chrome.
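Most servers and hosting platforms let you configure the lowest TLS version they accept. As an illustration, this is roughly how it looks with Python's standard `ssl` module. The function name `make_server_context` is our own, and a real server would also load its certificate and private key into the context.

```python
import ssl


def make_server_context() -> ssl.SSLContext:
    """Create a server-side TLS context that rejects TLS 1.0 and 1.1."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Refuse handshakes from clients that only offer older protocol versions.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```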

Use HSTS

HSTS, or HTTP Strict Transport Security, is a response header (Strict-Transport-Security) that informs the browser that any future attempts to access the site should be made using HTTPS. Mozilla has a good explanation of why this is important:

“You log into a free Wi-Fi access point at an airport and start surfing the web, visiting your online banking service to check your balance and pay a couple of bills. Unfortunately, the access point you're using is actually a hacker's laptop, and they're intercepting your original HTTP request and redirecting you to a clone of your bank's site instead of the real thing. Now your private data is exposed to the hacker.

Strict Transport Security resolves this problem; as long as you've accessed your bank's website once using HTTPS, and the bank's website uses Strict Transport Security, your browser will know to automatically use only HTTPS, which prevents hackers from performing this sort of man-in-the-middle attack.”
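In practice, HSTS is just one extra header on your HTTPS responses. A small Python sketch of building it follows. The helper name `hsts_header` and the one-year default are our own choices for illustration.

```python
def hsts_header(
    max_age_seconds: int = 31536000, include_subdomains: bool = True
) -> tuple[str, str]:
    """Build the Strict-Transport-Security header as a (name, value) pair."""
    # max-age tells the browser how long to remember to use HTTPS only.
    value = f"max-age={max_age_seconds}"
    if include_subdomains:
        # Apply the same rule to all subdomains of the site.
        value += "; includeSubDomains"
    return ("Strict-Transport-Security", value)
```

It can make sense to start with a short max-age while testing, since browsers will refuse plain HTTP to the site for as long as the value says.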

Use automated code analyzer tools

Code, like a natural language such as English, is flexible and can be written in many different ways. A code analyzer works much like a spell checker: it looks for patterns that should be avoided, and can thus automatically catch code that is bad for different reasons, including code that might raise security concerns.
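To give a feeling for what such a tool does under the hood, here is a toy analyzer in Python that flags calls to `eval` and `exec`, two functions that are risky when fed user input. This is only a sketch with a single rule that we picked for illustration. Real analyzers ship with hundreds of rules.

```python
import ast

# Illustrative assumption: built-in functions we want to flag as risky.
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source: str) -> list[tuple[int, str]]:
    """Return (line number, function name) for each risky call in the source."""
    findings = []
    # Parse the code into a syntax tree (nothing is executed) and inspect it.
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)


sample = "x = 1\nresult = eval(user_input)\nprint(result)\n"
findings = find_risky_calls(sample)
```

For the sample above, the analyzer reports the `eval` call on line 2 without ever running the code.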

Enforce code review policies

A code review process is like proofreading: other developers review the code changes written by a developer before the changes are merged into the code that will be used for the solution. In this process, the reviewing developer(s) get a chance to look at the proposed changes, so that more people check them for potential bugs or security issues.

Deliver pages from infrastructure that can handle high amounts of traffic

With traditional hosting, you had to dimension the hardware to handle peak traffic. Since the introduction of public clouds about 15 years ago, this is no longer something you have to consider in the same way, as cloud providers offer auto scaling. Having a CDN that delivers parts of the resources needed for a site, like images and scripts, is a good way to keep down the number of requests that the server(s) need to handle, and it also has the positive side effect of speeding up the website.

Use an automated penetration testing tool

In a world where sites are continuously updated with new code, and new vulnerabilities are constantly found and exploited, having rigorous review processes is not enough. An automated penetration testing tool will frequently look for known vulnerabilities in a site and send reports with potential findings to the team maintaining the site. A tool like this is priceless, since it can find both new vulnerabilities introduced with recent deploys and already existing vulnerabilities, because its tests are constantly updated as new vulnerabilities become known. At Epinova, several of our clients are using https://detectify.com/ as their penetration testing tool.

Use HTTP headers such as Content Security Policy

HTTP headers are keys and values that a web server sends along with each response. Although headers are not visible to the visitor, the browser uses them to decide how to behave. There are a few security headers that should always be in place, but it’s quite common that they are missing.

The most complex header is the Content Security Policy (CSP) header, which sets rules for which domains the browser is allowed to load resources from, such as images and videos but also JavaScript and CSS.

A missing or poorly defined CSP header might open up vulnerabilities where, for instance, a malicious script from an unwanted domain is loaded and executed in the visitor’s browser. We’ve also seen scenarios where the implementation of a CSP header has prevented undesired tracking data containing personal data from being sent.
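To make this more concrete, a CSP header value is a list of directives separated by semicolons, each naming a resource type and the sources allowed for it. A small Python sketch that builds one follows. The helper `build_csp` and the domain images.example.com are made up for the example.

```python
def build_csp(directives: dict[str, list[str]]) -> str:
    """Join directives into a Content-Security-Policy header value."""
    return "; ".join(
        f"{name} {' '.join(sources)}" for name, sources in directives.items()
    )


policy = build_csp(
    {
        # Fallback for anything not listed below: only our own domain.
        "default-src": ["'self'"],
        # Images may also come from a (hypothetical) image server.
        "img-src": ["'self'", "https://images.example.com"],
        # Scripts may only be loaded from our own domain.
        "script-src": ["'self'"],
    }
)
```

A browser receiving this policy would refuse to load a script from any other domain, which is exactly the protection described above.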

It’s easy to get a quick overview of the level of security headers for your existing site in this free online tool: https://securityheaders.com/

You can also read more about the Content Security Policy in this blog post: https://www.epinova.se/en/blog/2023/Do-you-take-the-security-of-your-website-seriously/

Prevent Cross-Site Request Forgery (XSRF/CSRF)

Cross-site request forgery is an attack against web-hosted apps whereby a malicious web app can influence the interaction between a client browser and a web app that trusts that browser.

These attacks are possible because web browsers send some types of authentication tokens automatically with every request to a website. This form of exploit is also known as a one-click attack or session riding because the attack takes advantage of the user's previously authenticated session. Cross-site request forgery is also known as XSRF or CSRF.

When developing a site, it's important to guard against XSRF/CSRF attacks. At Epinova we work with Microsoft's .NET framework, which has built-in support for protecting against this.
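The common defense is an anti-forgery token: the server embeds a secret value in each form and rejects requests that don't send it back. A malicious site can make the browser send cookies, but it cannot read that token. The Python sketch below only illustrates the principle and is not how the .NET implementation works. The function names and the way the key is created are our own assumptions.

```python
import hashlib
import hmac
import secrets

# Assumption: a real application loads this key from secure configuration.
SECRET_KEY = secrets.token_bytes(32)


def issue_token(session_id: str) -> str:
    """Create a token bound to the user's session, to embed in each form."""
    return hmac.new(SECRET_KEY, session_id.encode(), hashlib.sha256).hexdigest()


def is_valid_token(session_id: str, submitted_token: str) -> bool:
    """Check the submitted token in constant time before handling a request."""
    expected = issue_token(session_id)
    return hmac.compare_digest(expected.encode(), submitted_token.encode())
```

A request that changes data is then only processed if `is_valid_token` returns True for the current session and the token posted with the form.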

Ensure security practices for database backups

A database backup that contains sensitive information poses a risk, so backups should be handled with care. There should be well-defined procedures for where backups are stored and for regularly removing backups that are no longer needed.

Another good practice to have is to remove sensitive information from system environments where access might be less strict than the production environment.

At Epinova, we usually use Optimizely DXP for our clients, which comes with three different environments (production, preproduction/stage and integration/test). It's simple to automatically copy content from the production environment down to the other environments, which is great since testing is usually much more effective with real content.

However, when the database is copied to an environment that more users might have access to, it's important to remove sensitive content from the database. Epinova has developed a tool called EnvironmentSynchronizer to handle this for our clients. It keeps track of when a database is copied between environments, and gives an option to execute code that can alter or remove sensitive content.
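What the clean-up code does depends on the solution, but it often boils down to overwriting personal data with harmless placeholders. Here is a generic Python sketch using SQLite. It is not the EnvironmentSynchronizer API, and the `users` table with its `email` and `phone` columns is a hypothetical schema.

```python
import sqlite3


def scrub_users(connection: sqlite3.Connection) -> int:
    """Replace personal data in the hypothetical users table.

    Returns the number of rows that were changed.
    """
    cursor = connection.execute(
        # Keep the rows (so tests still have realistic volumes) but
        # overwrite anything that identifies a real person.
        "UPDATE users "
        "SET email = 'user' || id || '@example.invalid', phone = NULL"
    )
    connection.commit()
    return cursor.rowcount
```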

Use a Password Manager for managing site accounts

Regardless of whether you are using Single Sign On for your administrators and editors, or have separate accounts for your site, it's important that you handle your passwords with care. A password like "12345" or "DonaldDock45" will quickly be breached in a so-called brute force attack, where an attacker uses a computer to automatically try large numbers of passwords. A password manager will help you create and maintain a complex password without you having to remember it.
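The passwords a password manager generates are simply long strings of randomly picked characters. As a rough illustration of the idea (not of how any specific password manager works), this Python sketch uses the standard `secrets` module. The function name and the default length of 20 characters are our own choices.

```python
import secrets
import string

# Letters, digits and punctuation give a large set of possible characters.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Generate a random password using a cryptographically secure source."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))
```

Every extra character multiplies the number of combinations an attacker has to try, which is why a long random password holds up so much better against brute force than something you can remember.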

Also, reusing even a complex password between sites is not recommended, since it's enough that one of the sites where you use the password is breached. An attacker can then use the list of users and passwords to try to log in to other sites.

Therefore, passwords should always be unique per site. A password manager will also inform you about sites that have been breached, so make sure to check this and update those passwords regularly. You can also use the site https://haveibeenpwned.com/ to see if your email address has been part of any data breaches with stolen usernames and passwords.

Use Single Sign On or remove old accounts regularly

The beauty of using SSO for authentication is that deactivating an account centrally will make sure that the user (or a hacker) can't log into the site anymore. If you use local accounts, make sure that you remove old accounts as soon as they are no longer needed, to avoid leaving an account around that could potentially be used to access the site.
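A simple way to find candidates for removal is to look at when each account was last used. Here is a minimal Python sketch, assuming you can export the last login time per account. The 90-day limit and the account names are just examples, and an idle account should of course be checked before it is deleted.

```python
from datetime import datetime, timedelta


def stale_accounts(
    last_logins: dict[str, datetime], now: datetime, max_idle_days: int = 90
) -> list[str]:
    """Return accounts that have not logged in for more than max_idle_days."""
    cutoff = now - timedelta(days=max_idle_days)
    return sorted(name for name, last in last_logins.items() if last < cutoff)


# Hypothetical export of local accounts and their last login time.
logins = {
    "anna": datetime(2024, 5, 20),
    "old.editor": datetime(2023, 11, 1),
}
to_review = stale_accounts(logins, now=datetime(2024, 6, 1))
```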

Summary

Keeping a site resilient to high amounts of traffic and secure against hackers can be challenging, but being aware of which threats exist and which practices should be enforced helps you keep your site running in a secure way.

I'd like to say thanks to my colleague Emil Ekman as well as my former colleague Steve Celius for good feedback on the blog post.
